AI Deepfakes - The Danger to Come

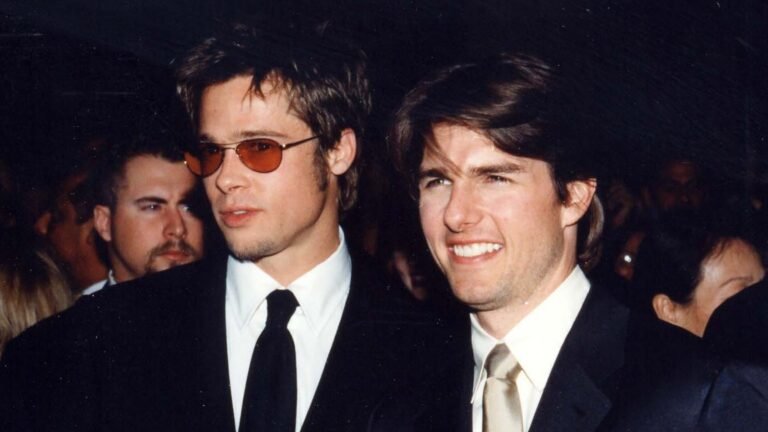

In early 2024, a viral video of a world leader declaring war sent shockwaves across the globe. Panic ensued—until experts exposed it as an AI-generated deepfake. This alarming incident highlights the growing threat of artificial intelligence in manipulating digital content. As deepfake technology advances, its potential for misuse escalates, challenging democracy, privacy, and truth itself. But how did deepfakes evolve, and what can be done to combat their dangers?

The Rise of Deepfake Technology: From Niche Innovation to Global Concern

The term “deepfake” originated in 2017, when a Reddit user shared AI-generated face-swap videos of celebrities. Initially a niche tool, deepfake technology has evolved rapidly, driven by advances in deep learning such as autoencoders and generative adversarial networks (GANs). Today, free open-source tools like DeepFaceLab let almost anyone create hyper-realistic fake videos.

While deepfakes offer positive applications—such as historical recreations in education and enhancements in filmmaking—their malicious use dominates headlines. A 2023 Reuters report revealed that 90% of viral deepfakes were either politically manipulative or pornographic. The accessibility of deepfake tools has raised concerns about misinformation, identity theft, and digital deception.

How Do AI Deepfakes Work?

Many deepfake systems rely on GANs, which pit two neural networks against each other: a generator and a discriminator. The generator produces synthetic images, while the discriminator tries to tell them apart from real ones. Each network is trained against the other, and over many iterations the generator learns to produce content that is nearly indistinguishable from reality.
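The adversarial game can be made concrete with the value function from the original GAN paper (Goodfellow et al., 2014), V(D, G) = E_data[log D(x)] + E_G[log(1 - D(G(z)))], which the discriminator tries to maximize and the generator tries to minimize. For a fixed generator, the best possible discriminator is D*(x) = p_data(x) / (p_data(x) + p_g(x)), and the resulting value bottoms out at -log 4 (about -1.386) exactly when the generator’s distribution matches the real one. The minimal sketch below evaluates this numerically; it assumes one-dimensional Gaussians as stand-ins for real and generated images, and the function names are illustrative, not part of any library.

```python
import math


def gaussian_pdf(x: float, mean: float, std: float) -> float:
    """Density of the normal distribution N(mean, std**2) at x."""
    z = (x - mean) / std
    return math.exp(-0.5 * z * z) / (std * math.sqrt(2.0 * math.pi))


def optimal_discriminator(x: float, real: tuple, fake: tuple) -> float:
    """Best discriminator for a fixed generator: D*(x) = p_data(x) / (p_data(x) + p_g(x))."""
    p_data = gaussian_pdf(x, *real)
    p_g = gaussian_pdf(x, *fake)
    total = p_data + p_g
    # Far out in the tails both densities can underflow to 0; return 0.5 (no information).
    return 0.5 if total == 0.0 else p_data / total


def gan_value(real: tuple, fake: tuple, lo: float = -20.0, hi: float = 20.0,
              steps: int = 20_000) -> float:
    """V(D*, G) = E_data[log D*(x)] + E_g[log(1 - D*(x))], by a midpoint Riemann sum."""
    dx = (hi - lo) / steps
    value = 0.0
    for i in range(steps):
        x = lo + (i + 0.5) * dx
        p_data = gaussian_pdf(x, *real)
        p_g = gaussian_pdf(x, *fake)
        total = p_data + p_g
        if total == 0.0:
            continue  # both densities underflowed; this point contributes nothing
        # log D*(x) = log(p_data / total) and log(1 - D*(x)) = log(p_g / total);
        # using the ratios directly avoids log(0) from floating-point rounding.
        if p_data > 0.0:
            value += p_data * math.log(p_data / total) * dx
        if p_g > 0.0:
            value += p_g * math.log(p_g / total) * dx
    return value


if __name__ == "__main__":
    real = (0.0, 1.0)  # "real data" distribution N(0, 1)
    for fake_mean in (3.0, 1.0, 0.0):
        # The generator's distribution N(fake_mean, 1) moves toward the real one.
        print(fake_mean, round(gan_value(real, (fake_mean, 1.0)), 3))
```

As the fake distribution’s mean moves from 3 to 1 to 0, the printed value falls toward -1.386, mirroring how training pushes the generator closer to the real data. Real GANs never compute D* in closed form; they approximate it by training the discriminator network with gradient steps.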

Open-source frameworks like TensorFlow and PyTorch make it easier to build deepfake models. Text-to-image systems such as OpenAI’s DALL-E and Stable Diffusion, which largely rely on diffusion models rather than GANs, generate lifelike images from text prompts, while voice-cloning software like Resemble.ai can replicate a person’s voice from just a few seconds of audio.

The Impact of Deepfakes on Society

1. Misinformation & Political Manipulation

Deepfakes pose a severe threat to democracy by spreading false narratives. In March 2022, a fake video of Ukrainian President Volodymyr Zelenskyy urging Ukrainians to lay down their arms circulated online in an attempt to demoralize citizens. Similarly, in January 2024, ahead of the New Hampshire presidential primary, AI-generated robocalls imitating President Joe Biden’s voice urged voters to skip the election.

2. Financial Fraud & Scams

Deepfake technology is also fueling financial fraud. In early 2024, a Hong Kong-based finance worker transferred about $25 million to scammers after a video call in which the company’s chief financial officer and other colleagues were all deepfakes. According to the FBI, AI-powered fraud surged by 200% year-over-year, with scammers exploiting voice and video clones to deceive victims.

3. Personal & Reputational Harm

Deepfakes disproportionately target women: over 95% of deepfake videos online are non-consensual explicit content, most of it depicting women. High-profile cases, such as the sexually explicit AI-generated images of Taylor Swift that spread on X (formerly Twitter) in January 2024, highlight the technology’s role in online harassment and digital abuse.

Combating the Deepfake Threat: Detection, Regulation, and Education

1. AI-Powered Detection Tools

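Tech companies are developing AI-based detection tools to counter deepfakes. Microsoft’s Video Authenticator looks for subtle blending boundaries and grayscale artifacts that the human eye may miss, while Intel’s FakeCatcher analyzes faint color changes in facial pixels caused by blood flow. Other detectors look for inconsistencies in blinking patterns and lighting. Provenance tools like Truepic take a complementary approach, timestamping and cryptographically signing media at capture so its authenticity can be verified later.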
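Intel has described FakeCatcher as looking for the blood-flow signal in a face’s pixels, an idea known as remote photoplethysmography (rPPG): each heartbeat slightly changes skin color, so a genuine face’s average green-channel brightness oscillates at the pulse rate. The sketch below is not Intel’s method. It assumes you already have one averaged green value per frame (the hypothetical `green_means` input) and simply finds the strongest frequency in a plausible heart-rate band using a plain discrete Fourier transform. A real detector would also need face tracking, noise filtering, and comparison of signals across facial regions.

```python
import math


def dominant_pulse_bpm(green_means: list[float], fps: float,
                       min_bpm: float = 42.0, max_bpm: float = 240.0) -> float:
    """Return the strongest frequency, in beats per minute, inside [min_bpm, max_bpm].

    green_means: average green-channel value of the face region, one entry per frame
    (a hypothetical input; extracting it requires face tracking, which is not shown).
    Returns 0.0 if no frequency bin falls inside the band.
    """
    n = len(green_means)
    if n == 0:
        raise ValueError("need at least one frame")
    mean = sum(green_means) / n
    signal = [g - mean for g in green_means]  # drop the constant skin-tone offset

    best_bpm, best_power = 0.0, -1.0
    for k in range(1, n // 2 + 1):
        bpm = k * fps / n * 60.0  # DFT bin k corresponds to k * fps / n hertz
        if bpm < min_bpm or bpm > max_bpm:
            continue  # ignore frequencies outside a plausible human heart rate
        # Plain O(n) discrete Fourier transform coefficient for bin k.
        re = sum(s * math.cos(2 * math.pi * k * t / n) for t, s in enumerate(signal))
        im = -sum(s * math.sin(2 * math.pi * k * t / n) for t, s in enumerate(signal))
        power = re * re + im * im
        if power > best_power:
            best_bpm, best_power = bpm, power
    return best_bpm


if __name__ == "__main__":
    fps = 30.0
    # Synthetic 10-second clip: a 1.2 Hz (72 bpm) pulse plus a slow 0.2 Hz lighting drift.
    frames = [120.0 + 0.5 * math.sin(2 * math.pi * 1.2 * t / fps)
              + 0.2 * math.sin(2 * math.pi * 0.2 * t / fps) for t in range(300)]
    print(dominant_pulse_bpm(frames, fps))  # about 72 bpm
```

The slow drift falls outside the heart-rate band and is ignored. The intuition behind this kind of check is that a synthesized face may lack a consistent, physiologically plausible pulse; how to turn that into a real/fake decision is a separate design choice not shown here.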

2. Legislation & Policy Measures

Regulatory bodies are stepping in to combat deepfake misuse. The European Union’s AI Act requires AI-generated content to be marked in a machine-readable way, for example with watermarks, and deepfakes to be disclosed as artificially generated, while U.S. states like California have criminalized the creation of non-consensual deepfakes. However, global enforcement remains inconsistent, highlighting the need for stronger international collaboration.
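As a toy illustration of machine-readable marking, the sketch below hides a short, repeating bit pattern in the least significant bit (LSB) of 8-bit pixel values and later checks how many pixels still carry it. This is not a scheme the AI Act prescribes or that any provider is known to use; the tag `MARK` and the function names are hypothetical. LSB marks are fragile, since almost any re-encoding or resizing wipes them out, which is why production watermarks are engineered to be far more robust.

```python
# Hypothetical 16-bit tag; a real scheme would use a keyed, far longer pattern.
MARK = [1, 0, 1, 1, 0, 0, 1, 0, 1, 1, 1, 0, 0, 1, 0, 1]


def embed_mark(pixels: list[int], mark: list[int] = MARK) -> list[int]:
    """Overwrite the least significant bit of each 8-bit pixel with the repeating tag."""
    return [(p & 0xFE) | mark[i % len(mark)] for i, p in enumerate(pixels)]


def mark_agreement(pixels: list[int], mark: list[int] = MARK) -> float:
    """Fraction of pixels whose least significant bit matches the expected tag bit."""
    if not pixels:
        return 0.0
    matches = sum((p & 1) == mark[i % len(mark)] for i, p in enumerate(pixels))
    return matches / len(pixels)


def is_marked(pixels: list[int], mark: list[int] = MARK, threshold: float = 0.9) -> bool:
    """Unmarked images agree by chance about half the time; marked ones agree fully."""
    return mark_agreement(pixels, mark) >= threshold


if __name__ == "__main__":
    image = [(i * 37 + 11) % 256 for i in range(1024)]  # stand-in grayscale pixels
    marked = embed_mark(image)
    coarse = [p // 4 * 4 for p in marked]  # crude stand-in for lossy re-encoding
    print(is_marked(image), is_marked(marked), is_marked(coarse))  # False True False
```

The last case shows the weakness: a simple quantization step, similar in spirit to lossy compression, erases the mark entirely.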

3. Media Literacy & Public Awareness

Public education plays a crucial role in combating deepfake threats. UNESCO’s 2023 campaign, “Think Before You Share,” teaches people to identify AI-generated content. Experts recommend checking for unnatural eye movements and mismatched audio, and verifying the source before sharing any digital media.

The Future of Reality: Can We Restore Trust?

As AI deepfake technology becomes more accessible, experts warn of an impending “infocalypse”—a world overwhelmed by digital deception. However, several solutions offer hope:

- Content Provenance: Initiatives like the Content Authenticity Initiative (CAI) promote tamper-evident metadata, known as Content Credentials, that records where a piece of media came from and how it was edited.

- Ethical AI Development: Organizations like OpenAI advocate for responsible scaling, including limits on access to their most powerful AI models.

- Public Vigilance: Critical thinking and digital skepticism are essential tools against misinformation. As journalist Claire Wardle states, “The antidote to misinformation isn’t just technology—it’s humility and curiosity.”
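The core idea behind tamper-evident metadata can be sketched in a few lines: bind a hash of the media bytes to a signed record of its origin, so that any later change to either the content or the record becomes detectable. This is not the Content Credentials (C2PA) format; the manifest layout, `SIGNING_KEY`, and function names are hypothetical. It also uses an HMAC with a shared secret purely to stay within Python’s standard library, whereas real provenance systems use public-key signatures and certificates so that anyone can verify a manifest without holding the signing key.

```python
import hashlib
import hmac
import json

SIGNING_KEY = b"demo-key-not-for-production"  # hypothetical shared secret


def make_manifest(content: bytes, creator: str, tool: str) -> dict:
    """Record who made the content and with what, bound to a SHA-256 hash of the bytes."""
    claim = {
        "creator": creator,
        "tool": tool,
        "content_sha256": hashlib.sha256(content).hexdigest(),
    }
    # Canonical JSON (sorted keys) so the same claim always serializes identically.
    payload = json.dumps(claim, sort_keys=True).encode("utf-8")
    signature = hmac.new(SIGNING_KEY, payload, hashlib.sha256).hexdigest()
    return {"claim": claim, "signature": signature}


def verify(content: bytes, manifest: dict) -> bool:
    """True only if the manifest is unaltered and still matches the content bytes."""
    payload = json.dumps(manifest["claim"], sort_keys=True).encode("utf-8")
    expected = hmac.new(SIGNING_KEY, payload, hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected, manifest["signature"]):
        return False  # the claim itself was edited after signing
    return manifest["claim"]["content_sha256"] == hashlib.sha256(content).hexdigest()


if __name__ == "__main__":
    photo = b"example image bytes"  # stand-in for a real file's contents
    manifest = make_manifest(photo, creator="Newsroom camera 7", tool="CameraApp 1.0")
    print(verify(photo, manifest))              # True
    print(verify(photo + b"edited", manifest))  # False: the content changed
    forged = {"claim": dict(manifest["claim"], creator="Someone else"),
              "signature": manifest["signature"]}
    print(verify(photo, forged))                # False: the claim changed
```

Note that such a manifest proves only that content is unchanged since signing; it cannot show that what the camera or tool originally captured was truthful.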

Conclusion: Navigating the Blurred Digital Landscape

AI deepfakes have fundamentally altered the way we perceive digital content. The notion that “seeing is believing” is now obsolete. However, through a combination of AI-powered detection, regulatory frameworks, and media literacy, society can mitigate the risks posed by deepfake technology. As we navigate this new digital era, our best defense remains informed skepticism and collective action.